
Some states have started to regulate apps that offer AI "therapy" as more and more people turn to AI for mental health advice. This is because there isn't enough federal regulation.

But the laws, which were all passed this year, don't fully deal with how quickly AI software development is changing. App developers, policymakers, and mental health advocates all agree that the patchwork of state laws that have come about isn't enough to protect users or hold the people who make harmful technology accountable.

Karin Andrea Stephan, CEO and co-founder of the mental health chatbot app Earkick, said, "The truth is that millions of people are using these tools and they aren't going back."

The laws differ from state to state. Illinois and Nevada have banned the use of AI to treat mental health conditions. Utah placed some limits on therapy chatbots, such as requiring them to protect users' health information and to clearly disclose that the chatbot is not human. Pennsylvania, New York, New Jersey, and California are also considering ways to regulate AI therapy.

The impact on users varies. Some apps have blocked access in states with bans. Others say they are making no changes while they wait for more legal clarity.

And many of the laws don't cover generic chatbots like ChatGPT, which aren't marketed as therapy tools but are used that way by many people. Those bots have drawn lawsuits in terrible cases where users lost touch with reality or took their own lives after using them.

Vaile Wright, who is in charge of health care innovation at the American Psychological Association, said that the apps could help meet a need because there aren't enough mental health providers in the country, care is expensive, and insured patients don't always have easy access to it.

Wright said that mental health chatbots based on science, made with input from experts, and watched over by people could change the way things are now.

"This could be something that helps people before they get to a crisis," she said. "That's not what's for sale on the market right now."

She said that is why there needs to be federal regulation and oversight.

The Federal Trade Commission said earlier this month that it was looking into seven AI chatbot companies, including the parent companies of Instagram and Facebook, Google, ChatGPT, Grok (the chatbot on X), Character.AI, and Snapchat. The agency wants to know how these companies "measure, test, and monitor potentially negative impacts of this technology on children and teens." The Food and Drug Administration is also convening an advisory committee on November 6 to review mental health devices that use generative AI.

Wright said that federal agencies could consider restricting how chatbots are marketed, limiting addictive practices, requiring companies to tell users that the chatbots are not medical providers, requiring companies to track and report suicidal thoughts, and giving legal protections to people who report bad company practices.

AI is used in mental health care in a lot of different ways, like "companion apps," "AI therapists," and "mental wellness" apps. It's hard to define and even harder to write laws about.

That has led to different regulatory approaches. Some states, for instance, target companion apps that are designed only for friendship and don't deal with mental health issues. The laws in Illinois and Nevada ban products that claim to treat mental health issues, with fines of up to $10,000 in Illinois and up to $15,000 in Nevada.

But it can be hard to put even one app into a category.

Stephan from Earkick said that Illinois' law is still "very muddy" in a lot of ways, and the company hasn't limited access there.

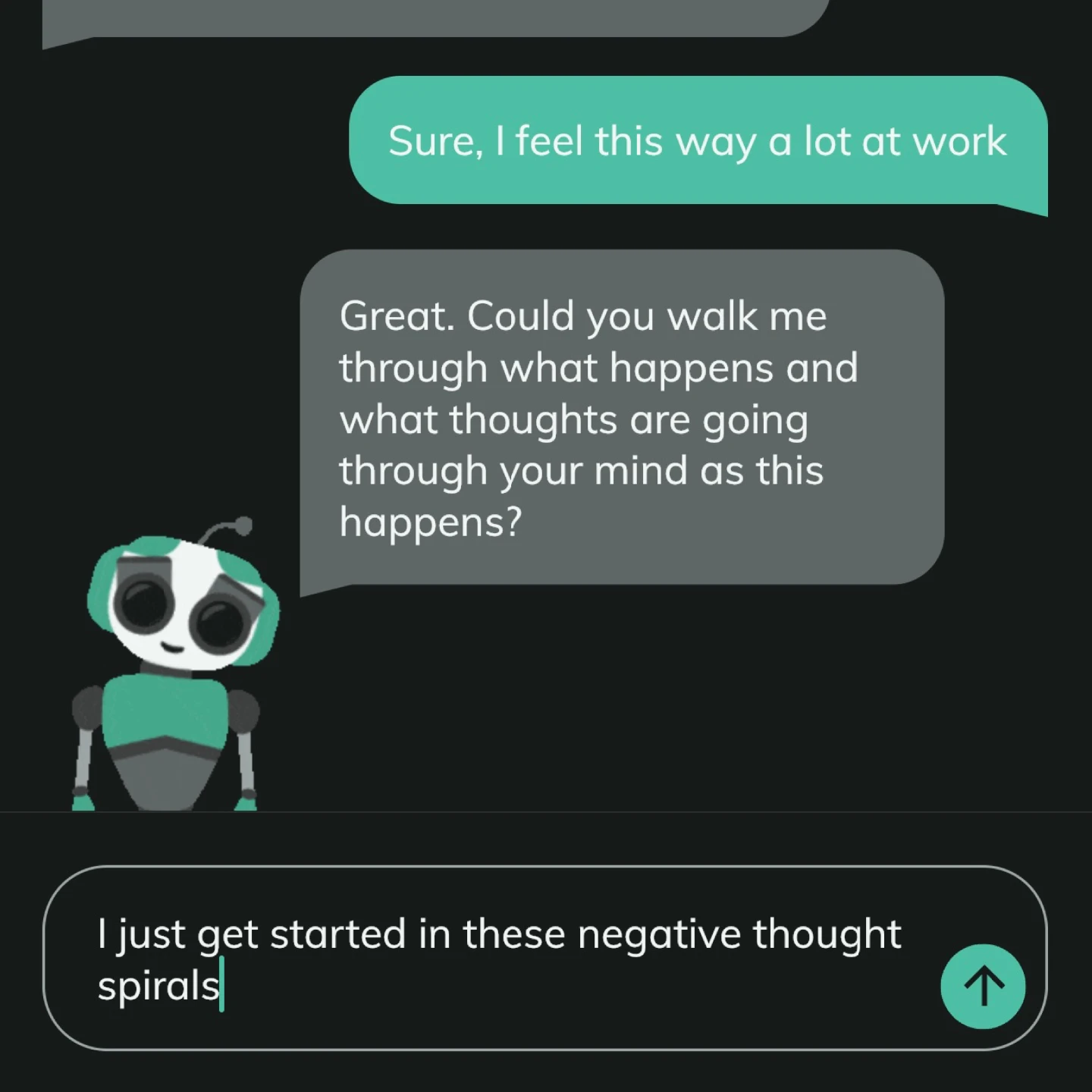

At first, Stephan and her team didn't want to call their chatbot, which looks like a cartoon panda, a therapist. But when users began using the word in reviews, they embraced the terminology so the app would show up in searches.

Last week, they stopped using medical and therapy terms again. Earkick's website used to call its chatbot "Your empathetic AI counselor, equipped to support your mental health journey." Now it calls it a "chatbot for self-care."

Still, Stephan said, "we're not diagnosing."

Users can set up a "panic button" to call a trusted loved one if they are in trouble, and the chatbot will "nudge" them to see a therapist if their mental health gets worse. Stephan said that the app was never meant to stop people from killing themselves, and the police would not be called if someone told the bot they were thinking about hurting themselves.

Stephan said she's glad that people are being careful with AI, but she's worried that states won't be able to keep up with new technology.

She said, "Things are changing at a very fast pace."